Revamp

Enhancing Accessible Information Seeking Experience of Online Shopping for Blind or Low Vision Users

ABSTRACT

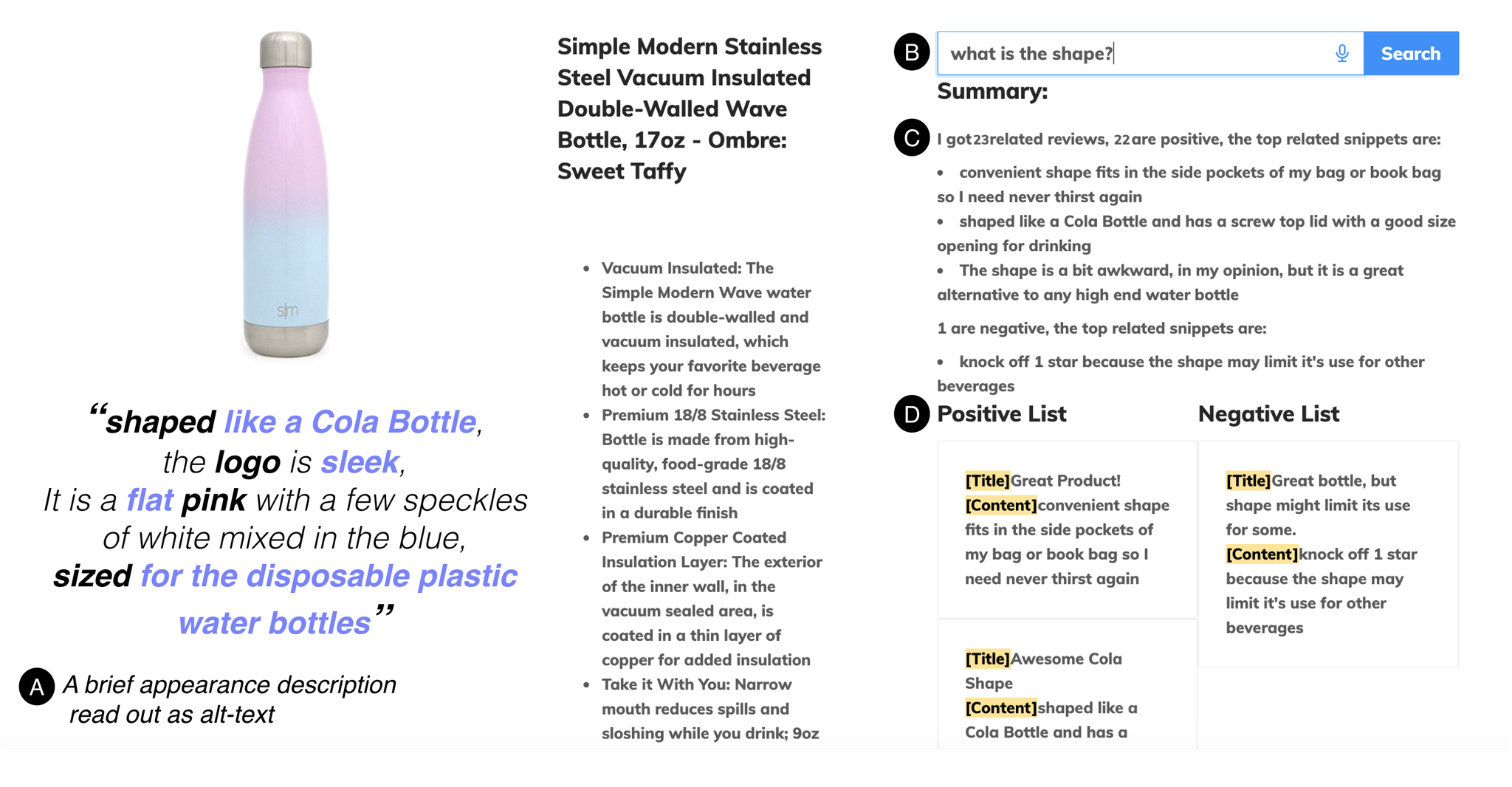

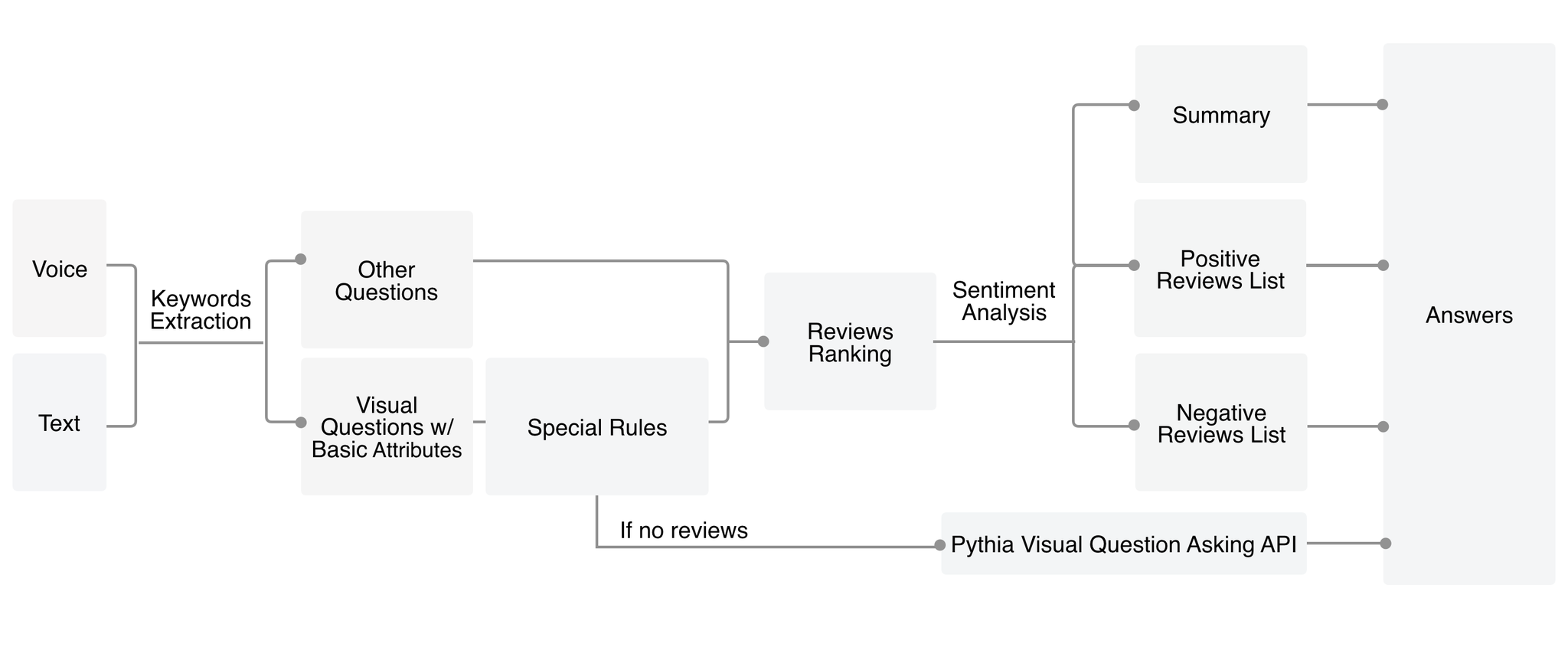

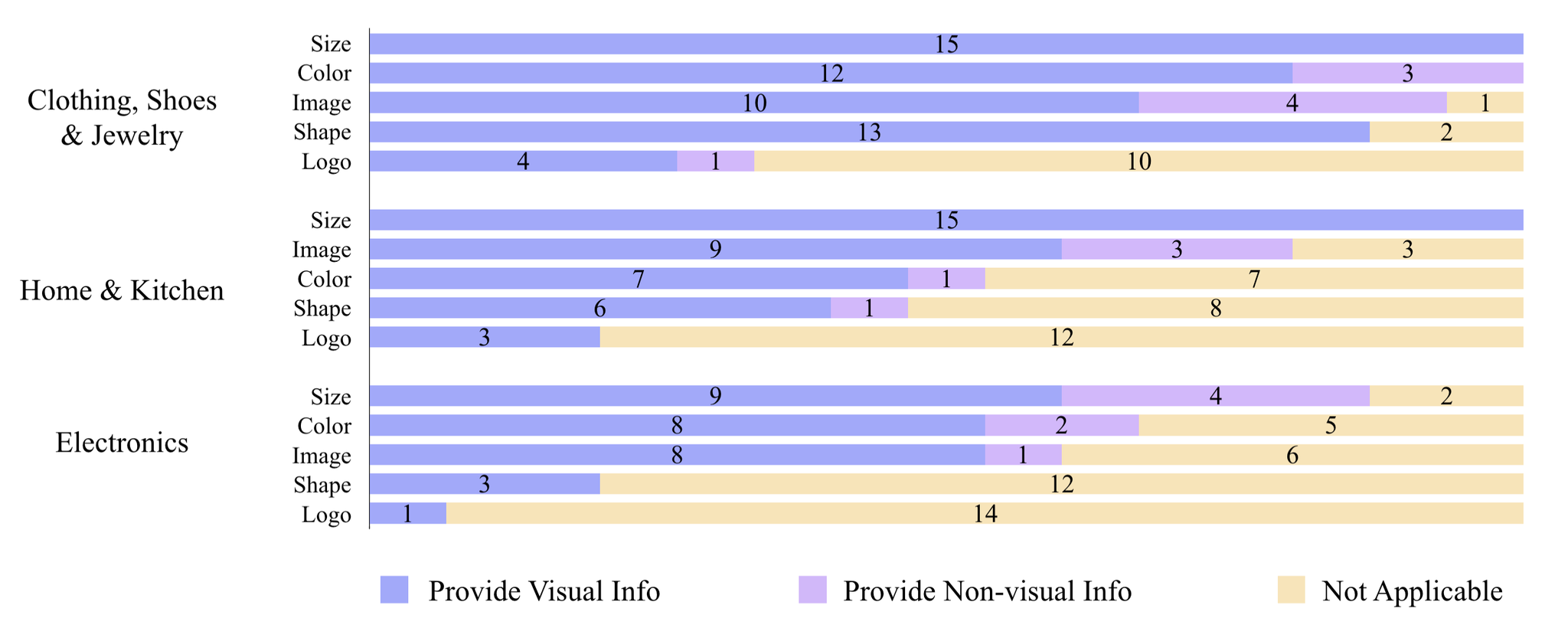

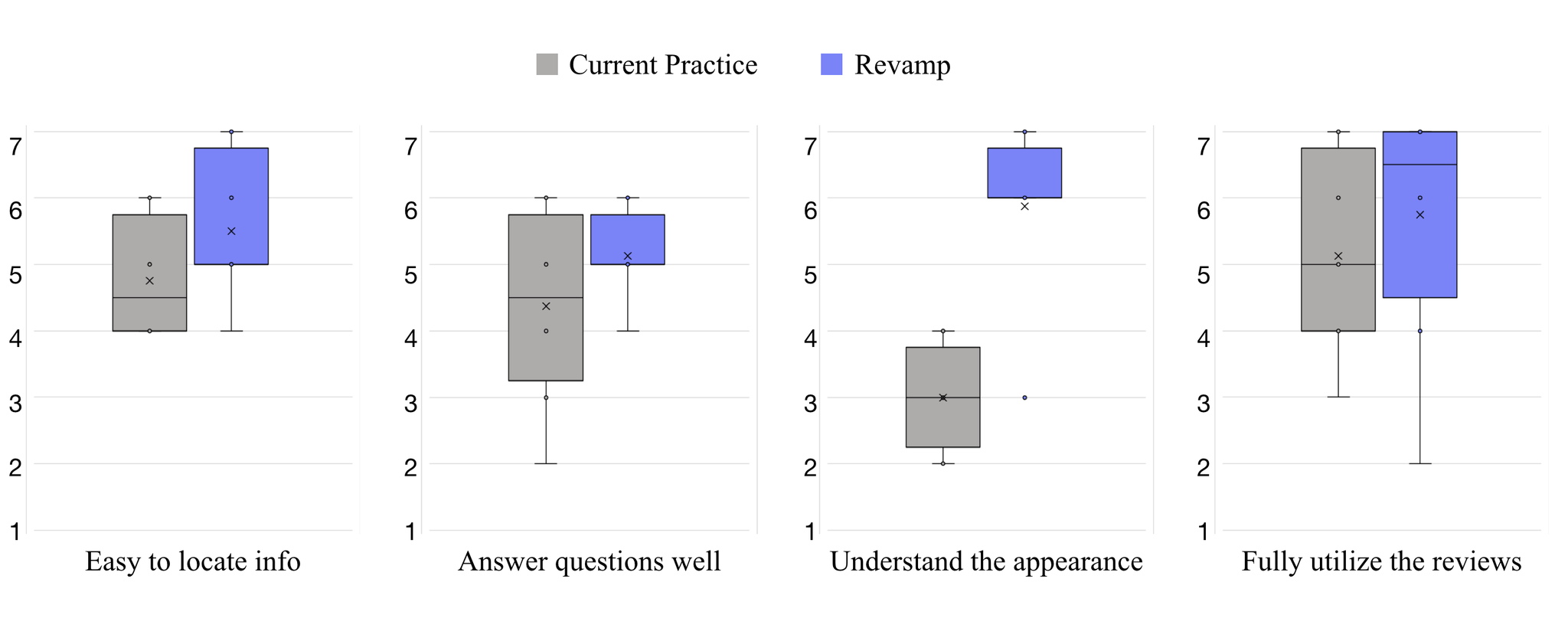

Online shopping has become a valuable modern convenience, but blind or low vision (BLV) users still face significant challenges using it, because of: 1) inadequate image descriptions and 2) the inability to filter large amounts of information using screen readers. To address those challenges, we propose Revamp, a system that leverages customer reviews for interactive information retrieval. Revamp is a browser integration that supports review-based question-answering interactions on a reconstructed product page. From our interview, we identified four main aspects (color, logo, shape, and size) that are vital for BLV users to understand the visual appearance of a product. Based on the findings, we formulated syntactic rules to extract review snippets, which were used to generate image descriptions and responses to users' queries. Evaluations with eight BLV users showed that Revamp 1) provided useful descriptive information for understanding product appearance and 2) helped the participants locate key information efficiently.

FULL CITATION

Ruolin Wang, Zixuan Chen, Mingrui Ray Zhang, Zhaoheng Li, Zhixiu Liu, Zihan Dang, Chun Yu, and Xiang 'Anthony' Chen. 2021. Revamp: Enhancing Accessible Information Seeking Experience of Online Shopping for Blind or Low Vision Users. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI '21). Association for Computing Machinery, New York, NY, USA, Article 494, 1–14. DOI: https://doi.org/10.1145/3411764.3445547

Revamp: FREQUENTLY ASKED QUESTIONS

- How can informative reviews be extracted to better understand product appearance?

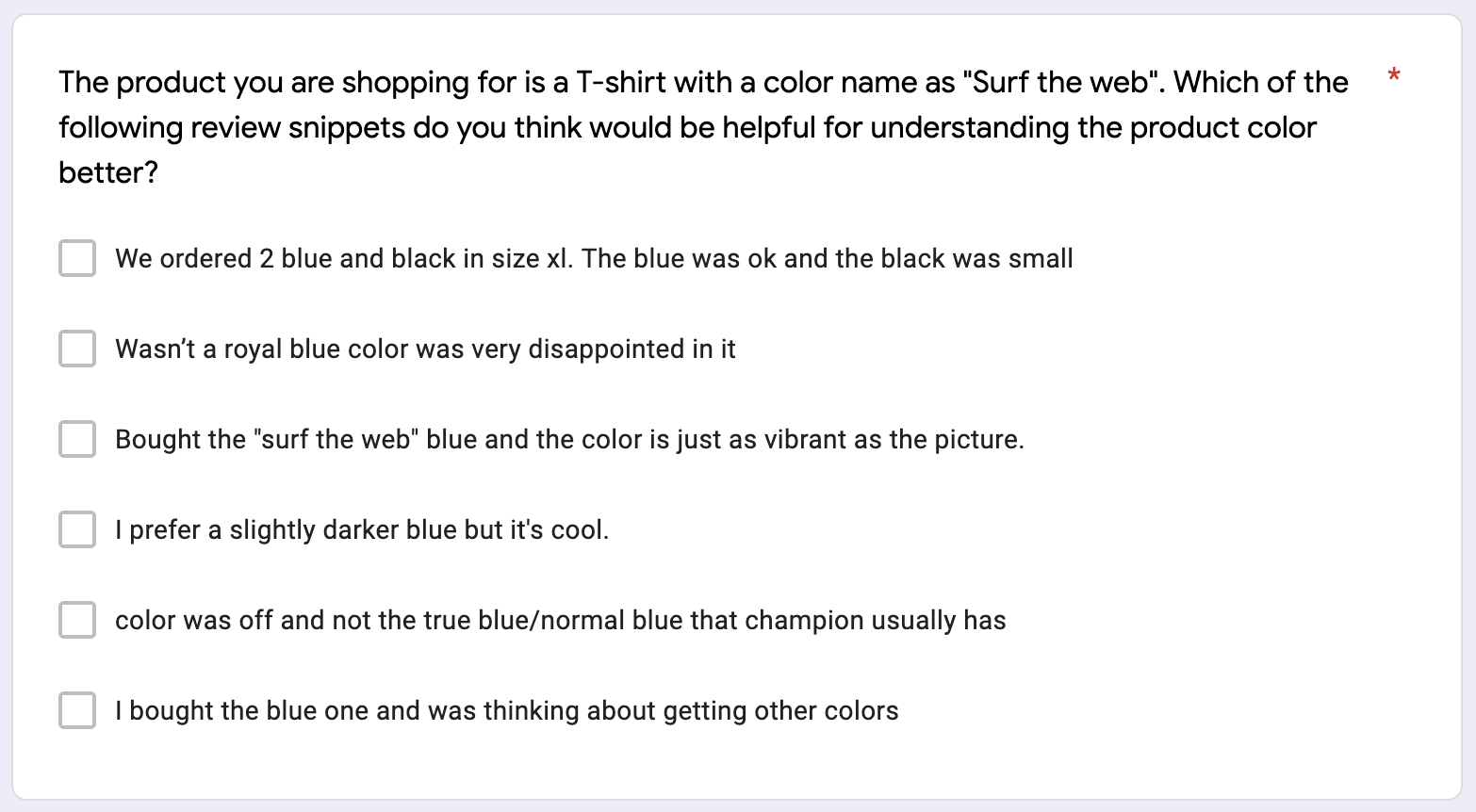

To answer this question, we first need a deeper understanding of what visual information is of special interest to BLV users; we can then explore whether reviews can serve as an informative source for answering these queries. Based on the formative study, we found that BLV users' visual questions about online products fall into two categories: (i) visual attributes of the image, e.g., color, logo, shape, size; and (ii) high-level concepts that can be inferred from an image, e.g., usage method, style. In this work, we focus on the four main visual attributes mentioned above, and the key is to extract descriptive and comparative expressions from the reviews. We iteratively established three groups of syntactic rules as follows:

Rule 1: Adjective + Keyword, or Keyword + Verb + Adjective. Descriptive adjectives usually provide supplementary visual information, e.g., “a shimmery purple”, “crescent shape”. Evaluative adjectives that express subjective emotions can be vague and are therefore not helpful for understanding the visual attributes, e.g., “great color”.

Rule 2: First-person pronoun + ... + Keyword + ... + that/which/but/because. Simple sentences that only express an attitude, e.g., “I feel disappointed at the color.”, carry little detail, whereas sentences with clauses usually provide more detailed and useful information.

Rule 3: Comparative Expressions. (1) Keyword (shape) + “like/liked”, e.g., “shaped like a Cola Bottle”. Comparing the shape of a product with a familiar everyday object helps users understand the shape. (2) Keyword (size) + “fit/for/of”, e.g., “size fits in all cup holders”. Reviews that detail how the product fits in a particular setting are informative. (3) “than/more of” + Keyword (color), e.g., “it is a terra cotta than mocha”. Complaints from sighted customers about this kind of difference between the picture and the product can also be informative for understanding the actual appearance.
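The three rule groups above can be approximated with plain pattern matching. The following is a minimal sketch, not the implementation used in Revamp: it replaces part-of-speech tagging with small hand-written word lists, and it covers only the "Adjective + Keyword" variant of Rule 1. All names here (`EVALUATIVE`, `STOPWORDS`, `COLOR_TERMS`, `rule1_descriptive`, `rule2_clause`, `rule3_comparative`) and the contents of the word lists are assumptions made for illustration.

```python
import re

# Hypothetical word lists (assumptions for this sketch, not from the paper).
EVALUATIVE = {"great", "good", "nice", "bad", "awful", "perfect", "lovely"}
STOPWORDS = {"the", "a", "an", "this", "that", "its", "my", "of", "at"}
COLOR_TERMS = ["terra cotta", "mocha", "purple", "red", "blue", "green"]

FIRST_PERSON = r"\b(?:i|we|my|our)\b"
CLAUSE_MARKERS = r"\b(?:that|which|but|because)\b"


def rule1_descriptive(sentence: str, keyword: str) -> bool:
    """Rule 1 (Adjective + Keyword only): accept when some word directly
    before the keyword is neither evaluative nor a stopword."""
    pattern = rf"\b(\w+)\s+{re.escape(keyword)}\b"
    return any(
        m.group(1) not in EVALUATIVE | STOPWORDS
        for m in re.finditer(pattern, sentence.lower())
    )


def rule2_clause(sentence: str, keyword: str) -> bool:
    """Rule 2: first-person pronoun ... keyword ... that/which/but/because."""
    pattern = rf"{FIRST_PERSON}.*\b{re.escape(keyword)}\b.*{CLAUSE_MARKERS}"
    return re.search(pattern, sentence.lower()) is not None


def rule3_comparative(sentence: str):
    """Rule 3: return the attribute whose comparative pattern matches,
    or None when no pattern matches."""
    colors = "|".join(re.escape(c) for c in COLOR_TERMS)
    patterns = {
        "shape": r"\bshaped?\s+(?:like|liked)\b",
        "size": r"\bsize\s+(?:fits?|for|of)\b",
        "color": rf"\b(?:than|more of)\s+(?:an?\s+)?(?:{colors})\b",
    }
    text = sentence.lower()
    for attribute, pattern in patterns.items():
        if re.search(pattern, text):
            return attribute
    return None


if __name__ == "__main__":
    for review in [
        "shaped like a Cola Bottle",
        "size fits in all cup holders",
        "it is a terra cotta than mocha",
    ]:
        print(review, "->", rule3_comparative(review))
```

A word-list filter like this is brittle compared with a real syntactic parse; it is meant only to make the structure of the rules concrete. For instance, `rule1_descriptive("great color", "color")` is rejected because "great" is in the evaluative list, while `rule1_descriptive("crescent shape", "shape")` is kept.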

- How could these syntactic rules be generalized to other products on Amazon? What about products without many reviews?

- What are the takeaways for accessible information seeking? What lessons learned from this project could be helpful for broader applications beyond the online shopping scenario?

Revamp: STORIES BEHIND RESEARCH

Revamp: Related Projects

- ......